NetBoot Part 4

Monday, March 31, 2008

I'm also getting fairly adept at making packages. A good many of my packages are just scripts that apply settings to the system, so I'm getting pretty handy with bash and quite intimate with dscl. But, perhaps most importantly, I'm learning how to make all sorts of settings in Leopard from the command line that I never knew how to make before.

The toughest one so far has been file sharing. In our lab we share all our Work partitions with the entire internal network over AFP and SMB. In the past we used SharePoints to modify the NetInfo database to do this, but that functionality has all moved to Directory Services. To complicate matters, Samba no longer relies simply on standard SMB configuration files in standard locations, and starting and stopping the SMB daemon is handled entirely by launchd. So figuring this all out has been a headache. But I think I've got it!

Setting Up AFP

Our first step is setting up the share point for AFP (Apple Filing Protocol) sharing. This wasn't terribly difficult to figure out, especially now that I've been using Directory Services to create new users. To create an AFP share in Leopard, you use dscl. Once you grok its syntax, dscl is fairly easy to use. It basically goes like this:

dscl node -action Data/Source [attribute] [value]

Here the node is the directory node to work in (a single "." means the local node), and the "Data Source" is the thing you're actually operating on. I like to think of it as a plist entry in the database (like a hierarchically structured file), which it basically is; sometimes I envision the old-style NetInfo structures instead. To get the needed values for my new share, I used dscl to look at a test share I'd created in the Sharing Preferences:

dscl . -read SharePoints/TEST

The output looked like this:

dsAttrTypeNative:afp_guestaccess: 1

dsAttrTypeNative:afp_name: TEST

dsAttrTypeNative:afp_shared: 1

dsAttrTypeNative:directory_path: /Volumes/TEST

dsAttrTypeNative:ftp_name: TEST

dsAttrTypeNative:sharepoint_group_id: XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXX

dsAttrTypeNative:smb_createmask: 644

dsAttrTypeNative:smb_directorymask: 755

dsAttrTypeNative:smb_guestaccess: 1

dsAttrTypeNative:smb_name: TEST

dsAttrTypeNative:smb_shared: 1

AppleMetaNodeLocation: /Local/Default

RecordName: TEST

RecordType: dsRecTypeStandard:SharePoints

Okay. So I needed to use dscl to create a record in the SharePoints data source with all these values. Fortunately, the share works without the "sharepoint_group_id" attribute, which is good, because I'm not yet sure how to generate that number. Create the share with all the other values and you should be okay:

sudo dscl . -create SharePoints/my-share

sudo dscl . -create SharePoints/my-share afp_guestaccess 1

sudo dscl . -create SharePoints/my-share afp_name My-Share

sudo dscl . -create SharePoints/my-share afp_shared 1

sudo dscl . -create SharePoints/my-share directory_path /Volumes/HardDrive

sudo dscl . -create SharePoints/my-share ftp_name my-share

sudo dscl . -create SharePoints/my-share smb_createmask 644

sudo dscl . -create SharePoints/my-share smb_directorymask 755

sudo dscl . -create SharePoints/my-share smb_guestaccess 1

sudo dscl . -create SharePoints/my-share smb_name my-share

sudo dscl . -create SharePoints/my-share smb_shared 1

This series of commands creates a share point record named "my-share" for the drive called "HardDrive." AFP clients will see it as "My-Share," SMB clients as "my-share."
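Since these eleven commands differ only in the attribute and value, a package script could generate them instead. What follows is a minimal sketch, not my actual package script: make_share_cmds is a hypothetical helper name, and it only prints the dscl commands so you can review them before running anything. The attribute list is copied from the TEST record read earlier, minus sharepoint_group_id.

```shell
#!/bin/bash
# Sketch only: print the dscl commands that create a share point record.
# make_share_cmds is a hypothetical helper, not part of Leopard.
#   $1 = record name (also used for ftp_name and smb_name)
#   $2 = AFP display name
#   $3 = directory to share
make_share_cmds() {
  local rec="$1" afp="$2" dir="$3" attr value

  # Create the empty record first.
  printf 'dscl . -create SharePoints/%q\n' "$rec"

  # One "attribute value" pair per line, as read from SharePoints/TEST;
  # sharepoint_group_id is deliberately left out.
  while read -r attr value; do
    # %q shell-quotes each value, so a path with spaces stays one argument.
    printf 'dscl . -create SharePoints/%q %s %q\n' "$rec" "$attr" "$value"
  done <<EOF
afp_guestaccess 1
afp_name $afp
afp_shared 1
directory_path $dir
ftp_name $rec
smb_createmask 644
smb_directorymask 755
smb_guestaccess 1
smb_name $rec
smb_shared 1
EOF
}

# Review the output first; pipe it to "sudo bash" to actually apply it.
make_share_cmds my-share My-Share /Volumes/HardDrive
```

Printing rather than executing keeps the sketch harmless to run anywhere, and the same output can be pasted straight into a postflight script once it looks right.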

After modifying the Directory Services database, it's always smart to restart it:

sudo killall DirectoryService

We also need to make sure AFP is running by starting the daemon and reloading its LaunchDaemon:

sudo AppleFileServer

sudo launchctl unload /System/Library/LaunchDaemons/com.apple.AppleFileServer.plist

sudo launchctl load -F /System/Library/LaunchDaemons/com.apple.AppleFileServer.plist
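To confirm the record took, it can help to read it back and check individual values. The sketch below is one way to do that, assuming the "dsAttrTypeNative:name: value" layout shown in the TEST output above; sharepoint_attr is a hypothetical helper name. It also assumes dscl may print a value on the line after its key (it seems to do this for values containing spaces), so that case is handled too.

```shell
#!/bin/bash
# Sketch only: pull one attribute's value out of "dscl . -read" output.
# sharepoint_attr is a hypothetical helper; $1 is the native attribute
# name without its "dsAttrTypeNative:" prefix, e.g. directory_path.
sharepoint_attr() {
  awk -v key="dsAttrTypeNative:$1:" '
    # Second line of a wrapped entry: strip the indent and print it.
    want { sub(/^ +/, ""); print; exit }
    $1 == key {
      # "key:" alone on its line means the value follows on the next line.
      if (NF == 1) { want = 1; next }
      # Otherwise drop the "key: " prefix and print the rest of the line.
      sub(/^[^ ]+ /, ""); print; exit
    }'
}

# Example use on a Leopard machine:
#   dscl . -read SharePoints/my-share | sharepoint_attr directory_path
```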

Not the easiest process, but not too bad. SMB was much tougher to figure out.

Setting Up SMB

Setting up SMB works similarly, but everything lives in a completely different and not necessarily standard place. To wit, Leopard has two different smb.conf files: an auto-generated one in /var/db (which you should not touch), and one in the standard /etc location. Fortunately, as it turned out, I didn't have to modify either of these, but they still led to some confusion. The way SMB is managed in Leopard is rather roundabout and interdependent. Information about SMB shares is stored in flat files (one per share) in /var/samba/shares. So, to create our "my-share" share, we need a file named for the share, in all lowercase:

sudo touch /var/samba/shares/my-share

And in that file we need some basic SMB info to describe the share:

path=/Volumes/HardDrive

comment=HardDrive

usershare_acl=S-1-1-0:F

guest ok=yes

directory mask=755

create mask=644
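A package script can write that file in one step with a here-document instead of touching it and editing it by hand. This is a minimal sketch: write_smb_share is a hypothetical helper, and the SHARES_DIR override exists only so the function can be tried somewhere other than /var/samba/shares. The keys and values are exactly the ones above; S-1-1-0 is the well-known Windows SID for "Everyone," and F grants full control.

```shell
#!/bin/bash
# Sketch only: write a per-share SMB definition like the one above.
# write_smb_share is a hypothetical helper. SHARES_DIR defaults to the
# Leopard location; point it elsewhere to try the function safely.
SHARES_DIR="${SHARES_DIR:-/var/samba/shares}"

write_smb_share() {
  local name="$1"        # share name
  local share_path="$2"  # directory to share
  local comment="$3"     # free-text comment shown to clients
  local file

  # The file is named after the share, in all lowercase.
  file="$SHARES_DIR/$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')"

  # S-1-1-0 is the "Everyone" SID; ":F" grants full access.
  cat > "$file" <<EOF
path=$share_path
comment=$comment
usershare_acl=S-1-1-0:F
guest ok=yes
directory mask=755
create mask=644
EOF
}

# Usage, from a script running as root:
#   write_smb_share my-share /Volumes/HardDrive HardDrive
```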

Next, and this was the tough part to figure out, we need to modify one single, very important preference file that basically tells launchd that SMB should now be running:

sudo defaults write /Library/Preferences/SystemConfiguration/com.apple.smb.server "EnabledServices" '(disk)'

This command modifies the file com.apple.smb.server.plist in the /Library/Preferences/SystemConfiguration folder. That file is watched by launchd, so when it is modified this way, launchd knows to start and run the smbd daemon appropriately. Still, for good measure, I like to reload the SMB server's LaunchDaemon by hand. You don't need to, but it's a nice idea:

sudo launchctl unload /System/Library/LaunchDaemons/com.apple.smb.server.preferences.plist

sudo launchctl load -F /System/Library/LaunchDaemons/com.apple.smb.server.preferences.plist
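In a package, these SMB "switch on" steps can be wrapped in one function. Below is a minimal sketch, not my actual package script: enable_smb and run are hypothetical names, and DRY_RUN (on by default here) makes the script print the commands instead of running them. Set DRY_RUN=0 and run it as root to apply them.

```shell
#!/bin/bash
# Sketch only: the SMB enable steps above, wrapped for a package script.
# enable_smb and run are hypothetical helpers. With DRY_RUN=1 (the default
# here) nothing is changed; the commands are only printed.
DRY_RUN="${DRY_RUN:-1}"
SMB_PREFS=/Library/Preferences/SystemConfiguration/com.apple.smb.server
SMB_JOB=/System/Library/LaunchDaemons/com.apple.smb.server.preferences.plist

run() {
  if [ "$DRY_RUN" = 1 ]; then
    # Print the command, shell-quoted, on one line.
    local q
    q=$(printf '%q ' "$@")
    printf '%s\n' "${q% }"
  else
    "$@"
  fi
}

enable_smb() {
  # Turn on disk (file) sharing; launchd watches this preference file.
  run defaults write "$SMB_PREFS" EnabledServices '(disk)'
  # Optional: reload the SMB preferences job by hand for good measure.
  run launchctl unload "$SMB_JOB"
  run launchctl load -F "$SMB_JOB"
}

enable_smb
```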

That's pretty much it! There are a few oddities: For one, the new share will not initially appear in the Sharing Preferences pane, nor will the Finder show it as a Shared Folder when you open the window.

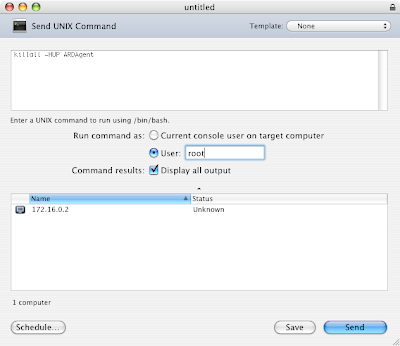


But the share will be active, and all will be right with the world after a simple reboot. (Isn't it always!) Also, if you haven't done it already, you may have to set permissions on your share using chmod in order for anyone to see it.
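Which permissions you need depends on your setup. One possibility, sketched here as an assumption rather than a rule, is granting "others" read and search access on the shared directory with chmod o+rx, which leaves the owner and group bits alone. open_share_dir is a hypothetical helper name; check the resulting mode against your own lab's policy.

```shell
#!/bin/bash
# Sketch only: let other users list and enter a shared directory.
# open_share_dir is a hypothetical helper; o+rx is an assumed policy.
open_share_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  # Add read (list) and execute (search) for "others" only.
  chmod o+rx "$dir" || return 1
  # Report the resulting mode string, e.g. drwxr-xr-x.
  ls -ld "$dir" | cut -c1-10
}

# Usage, as root for a volume owned by root:
#   open_share_dir /Volumes/HardDrive
```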

I was kind of surprised at how hard it was to set up file sharing via the command-line. But I'm glad I stuck with it and figured it out. It's good knowledge to have.

Hopefully someone else will find it useful as well.